This guide on “How to measure sales training effectiveness” helps organizations evaluate whether programs improve skills, change behavior, and deliver measurable business results tied to sales performance.

- Identify knowledge retention and engagement through early learning indicators

- Track behavioral adoption in real-world sales situations for impact validation

- Connect sales training programs to revenue growth, win rates, and customer satisfaction outcomes

- Use ROI models and continuous evaluation to align sales training programs with business goals

Your sales team had just finished a two-day training workshop. The room buzzed with energy, managers praised the content, and the post-session surveys came back glowing. Fast forward three months, and nothing had changed. Deals were still stalling, revenue numbers were flat, and new reps continued to struggle on calls. What looked like a win on paper failed to show up in the pipeline.

The problem is that most organizations stop measuring sales training after the first few weeks or once new-rep onboarding ends. They treat it as a one-off event rather than a cyclical process that requires reinforcement and continuous evaluation.

According to 2025 findings from Forrester Research, “Sales training can’t be ‘one and done.’ Sales knowledge transfer and the mastery of competencies are too critical to be relegated to a single half-day classroom session. Top training vendors follow a comprehensive learning strategy that includes long-term reinforcement and equipping sales coaches to sustain the change.”

This is where many companies stumble. Sales training effectiveness gets measured by how engaging it felt, not by whether it actually moved the needle on performance. Without evidence of real business impact, leaders are left guessing if their investment worked.

That’s why understanding how to measure sales training effectiveness is so critical. It’s about proving whether training translates into better sales conversations, higher win rates, and meaningful outcomes for the business.

In this blog, we’ll explore proven frameworks, practical metrics, and step-by-step methods to evaluate training impact and uncover the common pitfalls to avoid along the way.

What Is Sales Training Effectiveness?

Sales training effectiveness is the measure of how well a training program improves sales skills, knowledge, and behaviors to drive business outcomes. It evaluates whether your sales teams apply learned techniques in real sales interactions, achieve higher win rates, and contribute to revenue growth.

Effectiveness is assessed using metrics such as team performance, engagement, knowledge retention, quota attainment, and ROI. The goal is to connect training activities with measurable improvements in performance, productivity, and business outcomes, ensuring that programs deliver tangible value to both sales teams and the organization.

In short, sales training effectiveness answers three critical questions:

- Did the training equip reps with new knowledge and skills?

- Are those skills being applied in the field?

- Has this application led to tangible improvements in sales and business outcomes?

Frameworks For Evaluating Sales Training Effectiveness

Measuring training effectiveness is often challenging because organizations tend to rely on surface-level feedback. Employees might say they enjoyed the session, but without a structured framework, leaders cannot know whether those sessions improved skills, influenced sales behaviors, or contributed to business outcomes.

Established evaluation models turn abstract training goals into measurable results that matter to both sales teams and executives.

Kirkpatrick Evaluation Model

The Kirkpatrick model is one of the most widely adopted approaches for training evaluation. Its four levels create a logical progression from learner experience to organizational impact.

- Reaction: This measures the immediate response of participants. Did they find the training relevant, engaging, and useful? Many organizations collect this data through surveys at the end of workshops or e-learning modules. While important, this level only shows perceived value, not actual impact.

- Learning: At this stage, the focus shifts to knowledge and skill acquisition. Were salespeople able to demonstrate that they learned new techniques, product knowledge, or communication strategies? Assessments, quizzes, or role-play exercises are typically used to gather this data.

- Behavior: This is where many training programs struggle. The key question is whether learners apply what they’ve learned in real selling situations. Monitoring CRM entries, call recordings, and manager observations helps verify whether sales reps are adopting new practices, such as improved objection handling or better qualification questioning.

- Results: The final level evaluates business outcomes tied to training. Did the program contribute to higher close rates, larger deal sizes, or shorter sales cycles? This is the level executives care about most, because it links learning directly to organizational performance.

Many companies stop measuring at the first two levels, focusing only on satisfaction surveys and test scores. The deeper business case emerges when companies follow through to levels three and four, where actual behavior and results are measured.

Phillips ROI Model

Jack Phillips extended the Kirkpatrick framework by introducing a fifth level: Return on Investment (ROI). The Phillips model addresses a frequent executive concern: even if training improves performance, is the financial return worth the cost?

The ROI model compares the monetary benefits of training with its total costs. These benefits might come from higher revenue, reduced turnover, or improved productivity. By converting outcomes into dollar values, organizations can present a clear financial justification for training investments.

For example, if sales training enhances negotiation skills, the measurable outcome could be a consistent increase in deal size across the team. When those gains are aggregated and compared to the program cost, leadership has a data-backed case for continuing or expanding the initiative.

Extended Models

While Kirkpatrick and Phillips remain dominant, extended frameworks and modern analytics offer more nuanced evaluation.

Kaufman’s Model

Kaufman’s model builds on Kirkpatrick by adding layers of analysis, particularly around inputs, processes, and external value. This model not only assesses whether salespeople learned and applied skills but also whether the training process itself was efficient and whether it created value for external stakeholders such as customers.

For sales teams, this might mean evaluating whether better-trained reps are improving customer satisfaction scores or building stronger long-term relationships.

Modern Analytics (xAPI)

The Experience API (xAPI), formerly known as Tin Can API, is a modern learning technology standard that tracks and records detailed learning experiences across platforms. Unlike older standards such as SCORM, which mainly log completions and scores for courses launched inside an LMS, xAPI captures what a learner does, where they do it, and how they engage.

For example, if a salesperson reviews a product playbook before a client meeting or participates in a mobile simulation on objection handling, xAPI records these activities in a Learning Record Store (LRS). Over time, this creates a rich dataset that links learning activities with real-world performance outcomes.
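
To make the idea of a recorded learning activity concrete, here is a minimal sketch of what one xAPI-style statement might look like for the objection-handling simulation mentioned above. It follows the actor, verb, and object structure that xAPI statements use. The rep details, activity URL, and the `build_statement` helper are hypothetical placeholders, and actually sending the statement to an LRS is left out.

```python
import json
from datetime import datetime, timezone


def build_statement(rep_email, rep_name, activity_id, activity_name, when=None):
    """Return a minimal xAPI-style statement (actor, verb, object) as a dict."""
    when = when or datetime.now(timezone.utc)
    return {
        "actor": {
            "objectType": "Agent",
            "name": rep_name,
            "mbox": f"mailto:{rep_email}",
        },
        "verb": {
            # "completed" is one of the commonly used ADL verbs.
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,  # hypothetical activity identifier
            "definition": {"name": {"en-US": activity_name}},
        },
        "timestamp": when.isoformat(),
    }


statement = build_statement(
    "rep@example.com",
    "Sample Rep",
    "https://example.com/activities/objection-handling-sim",
    "Objection handling simulation",
    when=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(json.dumps(statement, indent=2))
```

Each statement is a small, self-describing record, which is what allows activities from different tools to be collected in one place and later compared with sales results.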

Organizations that use xAPI and learning record stores (LRS) gain more granular visibility into how sales training translates into day-to-day behavior. This is particularly valuable in hybrid or digital-first sales environments, where much of the learning happens outside formal classrooms.

By applying models such as Kirkpatrick, Phillips, Kaufman, and xAPI-driven analytics, sales leaders can build a comprehensive picture of effectiveness and continuously improve their programs.

Metrics To Measure Sales Training Effectiveness

Frameworks such as Kirkpatrick or Phillips help define what to evaluate, but metrics provide the concrete evidence leaders need to judge whether training is making an impact.

Lead Indicators

Lead indicators capture the immediate outcomes of training and help organizations understand whether learning is being absorbed. They are especially important because they act as predictors of long-term success. Common lead indicators include:

- Completion rates that reflect whether participants engaged with the full program.

- Assessment scores that demonstrate knowledge retention of product features or selling frameworks.

- Simulation or role-play performance that indicates whether reps can apply skills in a controlled environment.

- Learning management system (LMS) activity data that shows frequency and consistency of training engagement.

These measures highlight early adoption but also surface warning signs. For example, if reps consistently pass quizzes but perform poorly in role-plays, it suggests knowledge is not translating into applied skill.
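
The warning sign described above, where reps pass quizzes but struggle in role-plays, can be checked with a few lines of code. The sketch below is illustrative only: the `RepScores` structure, the sample scores, and the pass thresholds (80 for quizzes, 70 for role-plays) are assumptions, not recommended standards.

```python
from dataclasses import dataclass


@dataclass
class RepScores:
    rep: str
    quiz_pct: float      # written assessment score, 0-100
    roleplay_pct: float  # manager-scored role-play, 0-100


def knowledge_skill_gaps(scores, quiz_pass=80.0, roleplay_pass=70.0):
    """Return reps who pass the quiz but fall below the role-play threshold."""
    return [
        s.rep
        for s in scores
        if s.quiz_pct >= quiz_pass and s.roleplay_pct < roleplay_pass
    ]


# Hypothetical sample data.
team = [
    RepScores("Avery", quiz_pct=92, roleplay_pct=61),
    RepScores("Blake", quiz_pct=88, roleplay_pct=84),
    RepScores("Casey", quiz_pct=71, roleplay_pct=55),
]

flagged = knowledge_skill_gaps(team)
print(flagged)
```

In this sample only Avery is flagged: Blake passes both checks, and Casey has not yet passed the quiz, which points to a knowledge gap rather than an application gap.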

Lag Indicators

Lag indicators reflect the ultimate business outcomes of training, often several months after implementation. They demonstrate whether training has shifted core organizational priorities, such as revenue generation and deal velocity. Key lag indicators include:

- Increase in overall or per-rep revenue contribution.

- Improvements in win/loss ratios across opportunities.

- Reductions in sales cycle length indicating faster deal progression.

- Higher quota attainment rates across the team.

These indicators tie training to tangible business performance, which executives use to judge ROI. Because training outcomes often take months to materialize, lagging measures help capture the true impact over that period.

In fact, a Forrester Total Economic Impact study showed that organizations using Lessonly realized a 124% ROI over three years, highlighting how consistent measurement of lagging indicators, long after the initial sessions, reveals the real business value of training and enablement.

Qualitative Feedback

Metrics alone rarely explain why training succeeds or fails. Qualitative feedback provides essential context and helps identify barriers to application. Useful forms of qualitative data include:

- Post-training surveys that capture participant confidence and perceived relevance.

- Manager observations during coaching sessions or call reviews that assess whether skills are used under real conditions.

- Customer feedback, such as satisfaction surveys or Net Promoter Score (NPS) ratings, that reveals changes in buyer experience.

This feedback highlights gaps between knowledge and behavior. For example, if win rates remain flat despite strong participation, manager observations may uncover that reps lack confidence to use new techniques during negotiations.

By combining quantitative outcomes with qualitative context, leaders can pinpoint whether the issue lies in training design, coaching reinforcement, or market dynamics.

Productivity Metrics

Productivity-focused metrics are particularly valuable for organizations with large or growing sales teams. They show how training affects efficiency, onboarding, and retention. Key measures include:

- Ramp-up time, or the number of months it takes new hires to reach full productivity. Shorter ramp-up times reflect effective onboarding and faster revenue contribution.

- Quota attainment rates, which show the percentage of the team consistently meeting or exceeding targets.

- Turnover rates, where reduced attrition signals that training has improved rep confidence, job satisfaction, and career growth.

By tracking productivity alongside engagement and outcomes, companies can see not only whether training improves performance but also whether it strengthens the overall health of the sales organization.
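
Ramp-up time is easier to compare across cohorts when it is defined the same way every time. The sketch below assumes one possible definition: the first month in which a new hire reaches 100% of quota. Both that definition and the sample attainment figures are assumptions for illustration, and many teams will prefer a stricter rule, such as several consecutive months at quota.

```python
def ramp_up_months(monthly_attainment, full_productivity=1.0):
    """Return the 1-based month a new hire first reaches the threshold, or None."""
    for month, attainment in enumerate(monthly_attainment, start=1):
        if attainment >= full_productivity:
            return month
    return None  # the rep has not ramped within the observed period


# Hypothetical quota attainment by month since hire (1.0 = 100% of quota).
before_program = [0.15, 0.35, 0.55, 0.70, 0.90, 1.02]
after_program = [0.20, 0.50, 0.80, 1.05, 0.95]

print(ramp_up_months(before_program), ramp_up_months(after_program))
```

Comparing the result for cohorts hired before and after a program change gives a rough view of whether onboarding is getting faster.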

Relying on a single category of metrics creates blind spots. Lead indicators alone may exaggerate success, while lag indicators often come too late to adjust course. Qualitative feedback adds depth but cannot stand on its own without measurable data.

Productivity measures reveal organizational impact but require context from other categories to isolate training’s role. A balanced approach that combines all four ensures training effectiveness is captured at multiple stages, from immediate adoption to long-term financial results.

Step-By-Step Guide To Measuring Sales Training Effectiveness

A structured process ensures that measurement is not an afterthought but a deliberate part of the training strategy. The following steps provide a roadmap for building a reliable evaluation system that connects training activity to business performance.

Pre-Training: Stakeholder Involvement And Alignment

Without alignment, even the most well-designed program risks missing the mark. Involving stakeholders early, such as executives, frontline managers, HR, and sales enablement leaders, ensures that the training process supports business goals rather than generic learning objectives.

This stage clarifies which outcomes matter most. For example, a leadership team might prioritize faster new-hire ramp-up, while sales managers focus on improving objection handling.

When these perspectives are aligned, the training program gains relevance, and the key performance indicators become easier to measure later. When stakeholders help shape goals, they are more likely to support post-training reinforcement and accountability.

Step 1: Define Objectives And Align KPIs

A common mistake in training programs is setting vague goals such as “improve selling skills.” These cannot be measured meaningfully. Instead, objectives should be specific, measurable, and tied to business outcomes. For instance, a well-structured objective might be to “increase average deal size by 15% in the next two quarters.”

The next step is translating objectives into KPIs that are easy to track. These may include metrics such as quota attainment, win/loss ratios, or call quality scores. The key is ensuring that KPIs reflect both learning adoption and business performance.

Step 2: Collect Baseline Sales Data

Measurement is only possible when you know your starting point. Baseline data establishes the reference for evaluating progress. Collecting this data may involve analyzing CRM records for current win rates, reviewing call recordings for sales behaviors, or surveying customers to capture satisfaction levels before training.

Without baseline data, organizations cannot distinguish between performance gains driven by training and those caused by external factors such as market shifts or new product launches. Establishing benchmarks creates clarity and strengthens the credibility of the training evaluation.
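
As a rough sketch of what a baseline snapshot could look like, the code below summarizes win rate, average deal size, and average sales cycle from a list of opportunity records. The record fields (`stage`, `amount`, `opened`, `closed`) and the sample values are hypothetical, and a real CRM export will have its own schema.

```python
from datetime import date
from statistics import mean

# Hypothetical opportunity records exported before training starts.
opportunities = [
    {"stage": "won", "amount": 12000, "opened": date(2025, 1, 6), "closed": date(2025, 2, 5)},
    {"stage": "won", "amount": 18000, "opened": date(2025, 1, 10), "closed": date(2025, 3, 1)},
    {"stage": "lost", "amount": 8000, "opened": date(2025, 1, 15), "closed": date(2025, 2, 20)},
    {"stage": "lost", "amount": 5000, "opened": date(2025, 2, 1), "closed": date(2025, 2, 25)},
    {"stage": "open", "amount": 9000, "opened": date(2025, 3, 1), "closed": None},
]


def baseline(opps):
    """Summarize closed opportunities into a pre-training benchmark."""
    closed = [o for o in opps if o["stage"] in ("won", "lost")]
    if not closed:
        raise ValueError("no closed opportunities to build a baseline from")
    won = [o for o in closed if o["stage"] == "won"]
    return {
        "win_rate": len(won) / len(closed),
        # Averages are computed over won deals only; None if nothing was won.
        "avg_deal_size": mean(o["amount"] for o in won) if won else None,
        "avg_cycle_days": mean((o["closed"] - o["opened"]).days for o in won) if won else None,
    }


snapshot = baseline(opportunities)
print(snapshot)
```

Running the same function on the same fields after training keeps the before and after numbers comparable.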

Step 3: Track Learning And Engagement Metrics

Once training begins, early indicators provide insight into how well participants are engaging with the material. Learning management system reports, quiz results, and participation in role-plays can highlight whether salespeople are retaining knowledge.

Engagement metrics such as completion rates or voluntary participation in extra modules also signal the level of commitment from learners. Tracking these indicators helps identify gaps quickly.

For example, if assessment scores are high but simulation performance is weak, it suggests that reps understand the theory but cannot yet apply it. Addressing such gaps during or shortly after training increases the chances that learning will transfer to the field.

Step 4: Measure On-The-Job Behavior Changes

The most critical question is whether training changes how salespeople perform in real situations. Monitoring behavior requires a combination of tools and observations. CRM activity can reveal whether reps are adopting new sales methodologies, while call recordings allow managers to assess improvements in objection handling or negotiation.

This step can be resource-intensive, but it is where training translates into measurable business impact. For example, consistent use of a discovery framework during client calls demonstrates that skills taught in training are being applied in practice. Manager coaching sessions and peer reviews also provide valuable evidence of behavioral adoption.

Step 5: Link Training To Business Outcomes

Behavioral adoption must connect to business results for training to be considered effective. This involves analyzing whether changes in sales activity are contributing to improvements in outcomes such as win rates, deal velocity, or customer satisfaction.

The challenge is isolating training impact from other influences like product updates or marketing campaigns.

One practical approach is to compare results between trained and untrained groups. If trained reps show greater improvements in the same period, it becomes easier to attribute gains to the program.
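
One simple way to express the trained-versus-untrained comparison is a difference-in-differences calculation: the change in the trained group minus the change in the untrained group over the same period. The sketch below uses made-up deal counts, and it is only a first approximation. It assumes the two groups are otherwise comparable in territory, tenure, and deal mix, and it does not test for statistical significance.

```python
def win_rate(won, closed):
    """Share of closed opportunities that were won."""
    if closed <= 0:
        raise ValueError("closed must be positive")
    return won / closed


def diff_in_diff(trained_before, trained_after, control_before, control_after):
    """Change in the trained group minus change in the untrained (control) group."""
    return (trained_after - trained_before) - (control_after - control_before)


# Hypothetical counts of won and closed deals per period.
trained_before = win_rate(20, 100)
trained_after = win_rate(28, 100)
control_before = win_rate(21, 100)
control_after = win_rate(23, 100)

effect = diff_in_diff(trained_before, trained_after, control_before, control_after)
print(f"Estimated lift attributable to training: {effect:.1%}")
```

Here the trained group improved by 8 percentage points and the untrained group by 2, so roughly 6 points of the lift is not explained by factors that affected both groups.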

This linkage is vital for demonstrating to executives that training delivers more than good intentions and produces measurable returns.

Step 6: Calculate ROI Where Applicable

Return on investment provides the financial lens executives often require. The Phillips ROI Model is a widely recognized approach, where ROI is calculated by comparing the benefits of training with its costs. Benefits may include increased revenue, reduced employee turnover, or faster onboarding, depending on the program’s objectives.

Even approximate ROI calculations can be persuasive. The basic formula for measuring training ROI is:

ROI = ((Training Benefits − Training Costs) ÷ Training Costs) × 100

For instance, if a sales training program costs $50,000 and generates $200,000 in additional closed deals, the ROI would be ((200,000 − 50,000) ÷ 50,000) × 100 = 300%.
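
The formula above translates directly into a small helper, shown here as a minimal sketch using the same figures. The function name `training_roi` is illustrative, and the calculation is only as reliable as the benefit estimate that goes into it.

```python
def training_roi(benefits, costs):
    """ROI as a percentage: ((benefits - costs) / costs) * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


roi = training_roi(benefits=200_000, costs=50_000)
print(f"ROI: {roi:.0f}%")  # ROI: 300%
```

Note that a program whose benefits only equal its costs returns 0%, not 100%, because the formula measures net gain relative to cost.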

Demonstrating that training contributes to measurable revenue gains helps leadership evaluate enablement initiatives alongside other strategic business investments.

Step 7: Report Insights And Optimize Training Programs

Evaluation should not stop at reporting numbers. The final step is sharing insights with stakeholders and using the findings to improve future programs. Reports should highlight both successes and opportunities for adjustment.

For example, if training improved product knowledge but had little effect on negotiation outcomes, future programs might need more emphasis on applied selling techniques.

This stage transforms measurement from a one-time audit into a continuous improvement cycle. By regularly analyzing results and refining content, organizations ensure that sales training evolves alongside business needs and market conditions.

Platforms like Everstage make this process easier by connecting sales performance data directly to compensation outcomes. With automated reporting and transparent insights, leaders can quickly see how training translates into quota attainment and payout fairness, making optimization both faster and more effective.

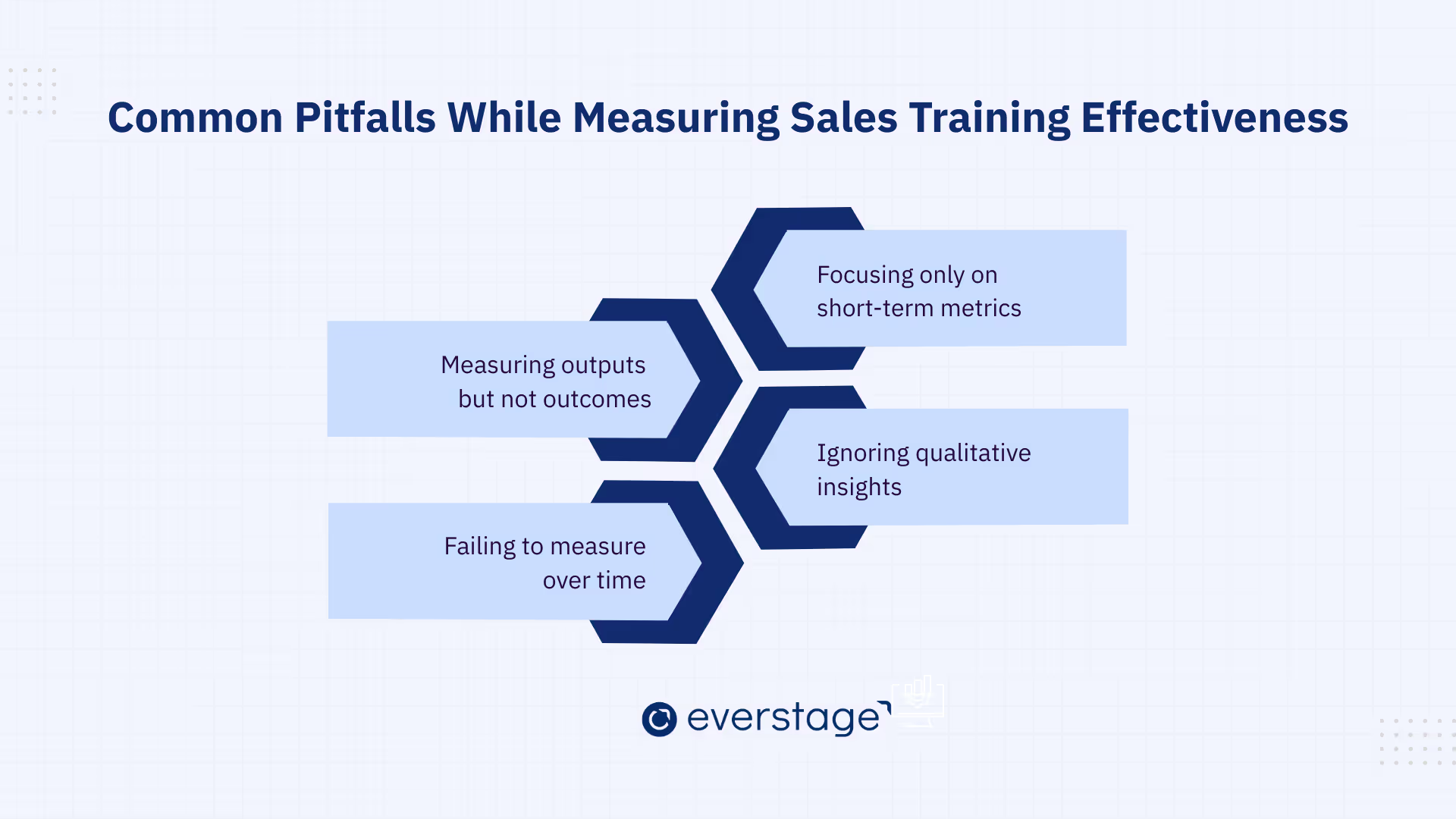

Common Pitfalls While Measuring Sales Training Effectiveness

Recognizing and addressing common pitfalls while measuring sales training effectiveness ensures that training investments are judged fairly and improve over time.

Focusing Only On Short-Term Metrics

Completion rates, quiz scores, and immediate feedback surveys are easy to collect, which is why many organizations stop here. These metrics only reveal whether employees attended and understood the material, not whether they can apply it.

A training session might receive high satisfaction ratings, yet months later, sales numbers remain unchanged. To avoid this trap, short-term data should always be paired with long-term indicators such as revenue growth, quota attainment, or customer satisfaction scores.

This ensures leaders see both the immediate uptake of learning and its eventual business impact.

Measuring Outputs But Not Outcomes

Tracking the number of calls made, demos booked, or proposals sent shows productivity but does not indicate whether the training improved results. For instance, a salesperson may double their call volume without improving their close rate if they are not applying the right techniques.

Outcomes, such as higher win ratios or increased deal value, provide a clearer measure of effectiveness. The focus should shift from counting activity to evaluating whether those activities drive performance gains.

Ignoring Qualitative Insights

Quantitative data can show trends but rarely explain the reasons behind them. If win rates stagnate despite strong participation in training, metrics alone will not reveal whether the issue lies in poor content retention, lack of managerial reinforcement, or customer resistance.

Qualitative insights from post-training surveys, coaching sessions, and customer feedback fill this gap. They help explain why certain behaviors are or are not being adopted. Without this context, organizations risk misdiagnosing problems and making ineffective adjustments to training programs.

Failing To Measure Over Time

Training impact rarely appears immediately. Skills may take weeks to apply consistently, and measurable outcomes such as revenue or customer satisfaction often lag further behind. Relying on one-time assessments conducted right after training creates a misleading view of effectiveness.

The better approach is to measure at multiple intervals, such as 30 days, 90 days, and six months post-training. This phased evaluation captures both the initial adoption of skills and the sustained impact on performance, giving leaders a more accurate picture of long-term effectiveness.
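
Scheduling those checkpoints up front makes it harder for follow-up measurement to slip. The sketch below computes review dates from a training end date. It treats six months as 180 days, which is an approximation, and the example date is hypothetical.

```python
from datetime import date, timedelta


def checkpoints(training_end, offsets_days=(30, 90, 180)):
    """Map each offset in days to the date of its post-training review."""
    return {days: training_end + timedelta(days=days) for days in offsets_days}


schedule = checkpoints(date(2025, 3, 3))
for days, review_date in schedule.items():
    print(f"{days}-day review: {review_date.isoformat()}")
```

For a program ending on March 3, 2025, this yields reviews on April 2, June 1, and August 30.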

By addressing these pitfalls, companies can ensure their measurement strategy captures both the immediate and lasting effects of training, making it easier to refine programs and demonstrate their real contribution to business outcomes.

Conclusion

The biggest blind spot in sales training is the failure to prove whether it works. Too many organizations run polished programs only to realize months later that nothing has changed. Revenue is flat, deals still stall, and reps slip back into old habits. That disconnect erodes confidence, wastes budget, and leaves leaders guessing instead of knowing.

The companies that rise above this trap treat measurement as a growth lever, not a postscript. They connect training data directly to performance outcomes, linking learning to productivity, quota attainment, and deal velocity. This allows leaders to see which programs truly move the needle and where enablement needs to evolve.

This mindset transforms sales training from a soft investment into a strategic driver of effectiveness. Instead of debating ROI, leadership sees evidence that better-trained reps close more deals, ramp faster, and deliver stronger customer experiences.

With a unified data foundation to measure training impact and a compensation engine that reinforces winning behaviors, Everstage helps you close the loop on sales performance.

Everstage Planning centralizes CRM, HRIS, and productivity data to give sales leaders a clear view of what drives results, while Everstage Incentives automates fair, transparent payouts linked to those outcomes. Together, they turn insights into action and performance into growth.

Book a demo with Everstage to see how integrated planning and incentives drive measurable sales effectiveness.

Frequently Asked Questions

What is the best way to measure sales training effectiveness?

The Kirkpatrick model, combined with ROI analysis, is the most comprehensive way to measure sales training effectiveness. This approach evaluates not only immediate learning and behavior changes but also connects training impact directly to business outcomes such as revenue growth and productivity.

How long should you measure training impact after completion?

Sales training effectiveness should be measured for at least 3–6 months after completion. This period allows time to capture behavior adoption in the field and evaluate its influence on key business outcomes like win rates and customer satisfaction.

Which is better: Kirkpatrick or Phillips ROI model?

The Kirkpatrick model provides a strong foundation by assessing reaction, learning, behavior, and results. The Phillips ROI Model adds a crucial financial dimension, calculating return on investment to help executives justify training costs. Many organizations use both, starting with Kirkpatrick and extending into Phillips for ROI justification.

Can small businesses measure training effectiveness without advanced tools?

Yes, small businesses can measure training effectiveness without advanced tools. Simple methods like post-training surveys, CRM data analysis, and tracking sales KPIs such as win rates and quota attainment provide meaningful insights into training impact.

What are the top metrics every sales leader should track?

Key metrics for measuring sales training effectiveness include engagement, knowledge retention, win rates, revenue impact, and ramp-up time. Together, these metrics highlight both short-term learning adoption and long-term business performance improvements.
